Set up Kubernetes cluster with Ansible (Part 2)

Welcome back. If you made it here, I’m glad you are finding this helpful. In the last article, we set up the required software packages on all nodes in the cluster. In this article, we will focus on setting up the control plane and the worker nodes. I have two different playbooks, control-plane-playbook.yml and worker-nodes-playbook.yml, because the setup differs between the two. Let’s start with the control plane (aka the brain and coordinator of the cluster).

We need to do a few things in order to set up the control plane:

- Run a series of preflight checks to make sure the nodes and software packages meet the minimum requirements for the cluster.

- Generate a self-signed Certificate Authority in `/etc/kubernetes/pki`.

- Generate kubeconfig files in `/etc/kubernetes`. A kubeconfig file holds the cluster information and user certificate that an API client like `kubectl` can use to communicate with the cluster. The `admin.conf` file in particular is what we will copy to the home directory of our user `dev` and also to my remote machine.

- Install static pod manifests in `/etc/kubernetes/manifests` for the API server, controller-manager and scheduler components. The `kubelet` daemon watches this directory to know which pods to create on that specific node. Static pod manifests are helpful for running mission-critical pods on a node without going through the API server. Hint: this is a good use case for creating and monitoring control plane components.

- Taint the control plane node so that regular workload pods are not scheduled there.

- Install the CoreDNS server and kube-proxy.

- Other stuff related to generating config and bootstrap tokens for nodes to join the cluster.

It sounds like a lot to do manually. Thankfully, kubeadm init does this in a single command.

    ---
    - hosts: azure_control_plane
      become: true
      tasks:
        - name: Set up kubeadm
          shell: |
            kubeadm config images pull --cri-socket unix:///run/containerd/containerd.sock --kubernetes-version v1.29.3

        - name: Kubeadm initialize
          shell: |
            kubeadm init -v=5 --ignore-preflight-errors=NumCPU,Mem --pod-network-cidr=10.244.0.0/16

In the command above, note that I am skipping the checks for the number of cores and memory with the `--ignore-preflight-errors=NumCPU,Mem` option. kubeadm requires a minimum of 2 GB of RAM and 2 CPU cores on the control plane node. When I was testing this out in Azure, I used a Standard B1s SKU, which is a 1-core, 1 GB machine, so the preflight checks would have failed without this option.
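One thing to keep in mind is that `kubeadm init` is not meant to be run twice on the same node, so re-running the playbook would fail at this task. A minimal sketch of one way to guard against that, assuming the presence of `/etc/kubernetes/admin.conf` is a good enough signal that the node is already initialized, is the `creates` argument of the shell module:

```yaml
# Sketch: same init command as above, with an idempotency guard added.
- name: Kubeadm initialize
  ansible.builtin.shell: |
    kubeadm init -v=5 --ignore-preflight-errors=NumCPU,Mem --pod-network-cidr=10.244.0.0/16
  args:
    # Assumption: kubeadm init writes this file on success, so if it
    # already exists the task is skipped instead of failing.
    creates: /etc/kubernetes/admin.conf
```

With this in place, a second run of the playbook reports the task as skipped rather than erroring out.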

Next we copy the admin.conf kubeconfig file to our user’s .kube folder in their home directory.

    - name: Copy the kubeconfig file to the user's home directory
      shell: |
        mkdir -p /home/{{ ansible_user }}/.kube
        cp -i /etc/kubernetes/admin.conf /home/{{ ansible_user }}/.kube/config

    - name: Change the ownership of the kubeconfig file
      file:
        path: /home/{{ ansible_user }}/.kube/config
        owner: "{{ ansible_user }}"
        group: "{{ ansible_user }}"
        mode: '0600'

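Note the use of the `ansible_user` variable. It is a connection variable rather than a gathered fact, so depending on how the connection user is supplied, it may be undefined or may not resolve to the `dev` user we are running the playbook as (the gathered fact `ansible_user_id`, by contrast, reports root when `become: true` is set at the start of the playbook). If that is the case, just add a variable in the inventory file for that host group like this: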

    [azure_nodes:vars]
    ansible_user=dev
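As an aside, the copy step can also be written with Ansible modules instead of `mkdir` and `cp`. This is only a sketch of an alternative, not what I ran: it assumes the user's primary group has the same name as the user, and the `0700` mode on the directory is my own choice.

```yaml
- name: Create the .kube directory for the user
  ansible.builtin.file:
    path: "/home/{{ ansible_user }}/.kube"
    state: directory
    owner: "{{ ansible_user }}"
    group: "{{ ansible_user }}"   # assumption: group is named after the user
    mode: '0700'                  # assumption: private directory

- name: Copy admin.conf to the user's kubeconfig
  ansible.builtin.copy:
    src: /etc/kubernetes/admin.conf
    dest: "/home/{{ ansible_user }}/.kube/config"
    remote_src: true   # src lives on the control plane node, not on the Ansible machine
    owner: "{{ ansible_user }}"
    group: "{{ ansible_user }}"
    mode: '0600'
```

The upside of this form is that the directory ends up owned by the user as well, and both tasks report "ok" instead of "changed" when nothing needs to be done.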

The next step is to install a pod networking plugin for the CNI we installed in the last playbook. I am using Flannel, but you can also use Calico or any other CNI implementation. Note that when I ran `kubeadm init`, I passed `--pod-network-cidr=10.244.0.0/16` as the pod network address range, because that is the network the Flannel manifest uses by default. If you want to use a different pod network, download the Flannel YAML file from GitHub, modify it, and apply that file instead.

    - name: Apply flannel pod networking
      shell: |
        kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
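If you do go with a different pod network, the download-and-modify step could look roughly like the sketch below. The `pod_network_cidr` variable and the `/tmp/kube-flannel.yml` path are hypothetical names I made up for illustration, and the sketch assumes the manifest contains the default `10.244.0.0/16` network literally. It also sets `KUBECONFIG` explicitly, on the assumption that the task runs as root and root has no kubeconfig of its own.

```yaml
# Hypothetical variable; it must match the --pod-network-cidr passed to kubeadm init.
# Define it at play level (vars:) or in the inventory, for example:
#   pod_network_cidr: 10.100.0.0/16

- name: Download the Flannel manifest
  ansible.builtin.get_url:
    url: https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
    dest: /tmp/kube-flannel.yml   # hypothetical scratch location
    mode: '0644'

- name: Point Flannel at the custom pod network
  ansible.builtin.replace:
    path: /tmp/kube-flannel.yml
    # Assumption: the default network appears literally in the manifest.
    regexp: '10\.244\.0\.0/16'
    replace: "{{ pod_network_cidr }}"

- name: Apply the modified Flannel manifest
  ansible.builtin.shell: |
    kubectl apply -f /tmp/kube-flannel.yml
  environment:
    # Assumption: the task runs as root via become, so kubectl is
    # pointed at the admin kubeconfig generated by kubeadm init.
    KUBECONFIG: /etc/kubernetes/admin.conf
```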

The control plane should be working as expected now. I’ll admit it is very inconvenient to have to log into the control plane node every time we want to run kubectl. It would be nice to monitor the Kubernetes cluster from the remote machine running the Ansible playbook. To achieve this, add a final task to copy the admin.conf file to your local machine running Ansible like this:

    - name: Copy admin kubeconfig from control plane to local machine
      fetch:
        src: /etc/kubernetes/admin.conf
        dest: kubeconfig-admin.conf
        flat: yes

Now we can run the control plane playbook:

    ansible-playbook --private-key=~/.ssh/id_azure -u dev -i inventory.ini control-plane-playbook.yml

You should see the kubeconfig-admin.conf file in the same directory you’re running the playbook from. Copy this file to $HOME/.kube/config. If you already have other clusters listed there, you’ll have to merge the files manually; alternatively, you can modify the playbook to create the file there automatically.
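For the merge case, here is a rough sketch of a local play that lets kubectl do the merging. It assumes kubectl is installed on the machine running Ansible and that the fetched file sits next to the playbook; the variable names and the `.merged` output file are hypothetical. It writes to a separate file on purpose so you can review the result before replacing your real config.

```yaml
- hosts: localhost
  connection: local
  gather_facts: false
  vars:
    # Hypothetical paths; adjust to where the fetched file actually lives.
    fetched_kubeconfig: "{{ playbook_dir }}/kubeconfig-admin.conf"
    user_kubeconfig: "{{ lookup('env', 'HOME') }}/.kube/config"
  tasks:
    - name: Merge the fetched kubeconfig with the existing one
      ansible.builtin.command: kubectl config view --flatten
      environment:
        # kubectl merges every file listed in KUBECONFIG (colon-separated).
        KUBECONFIG: "{{ user_kubeconfig }}:{{ fetched_kubeconfig }}"
      register: merged_kubeconfig
      changed_when: false

    - name: Write the merged result to a separate file for review
      ansible.builtin.copy:
        content: "{{ merged_kubeconfig.stdout }}\n"
        dest: "{{ user_kubeconfig }}.merged"   # hypothetical name; the original is left untouched
        mode: '0600'
```

Keep in mind that when two files define an entry with the same name, the first file listed in `KUBECONFIG` wins, so clusters that share cluster or user names may need renaming before they can coexist.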

The last step is setting up the worker nodes to join the cluster. I will paste the entire playbook below, as it is fairly short but not as straightforward, and then explain it. It lives in a separate file called worker-nodes-playbook.yml.

    ---
    - hosts: azure_control_plane
      become: true
      tasks:
        - name: Get token for joining worker nodes
          shell: |
            kubeadm token create --print-join-command
          register: kubeadm_join_token_cmd

        - name: Print the join command
          debug:
            var: kubeadm_join_token_cmd.stdout_lines[0]

        - name: Set the join command as a fact
          set_fact:
            k8_worker_join_command: "{{ kubeadm_join_token_cmd.stdout_lines[0] }}"

    - hosts: azure_worker_nodes
      become: true
      tasks:
        - name: Join worker nodes to the cluster
          shell: |
            {{ hostvars[groups["azure_nodes"][0]]["k8_worker_join_command"] }}

For the worker nodes to join, we need to get a bootstrap token from the control plane node and use it on all the worker nodes. In Ansible, a play maps a group of hosts to a sequence of tasks, and the challenge here is that we need to run a command on the control plane node to get the token and then pass its output to the other nodes. We essentially need to share a variable between two plays that target different hosts. So how does Ansible solve this problem? You might have guessed: using facts. We briefly talked about facts in part 1, but what I didn’t mention is that we can set user-defined key-value pairs and associate them with a host.

The `kubeadm token create --print-join-command` command outputs the command we need to run on the worker nodes. We use the `register` directive to capture the output of the shell command in the `kubeadm_join_token_cmd` variable. The next task uses the `debug` module to print the variable to our screen (just for debugging purposes). A registered variable is stored against the host the task ran on (here, the control plane node), so the worker nodes cannot refer to it by name directly. We save the first line of the output with the `set_fact` module under a clearer key called `k8_worker_join_command`. In the next play (which runs on the `azure_worker_nodes` hosts), we retrieve the fact like this: `hostvars[groups["azure_nodes"][0]]["k8_worker_join_command"]`. Here is a breakdown of what this does:

- `hostvars`: Ansible’s way of retrieving variables and facts from another host.

- `groups["azure_nodes"][0]`: Remember that in part 1, the control plane and worker nodes were part of a group called `azure_nodes` in the inventory file. `groups["azure_nodes"]` returns a list of nodes and `[0]` selects the first one (which is our control plane node).

The combination of both items above gives us access to the fact dictionary of the control plane node, where we can retrieve the k8_worker_join_command key.
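Relying on `[0]` works only as long as the control plane is listed first in `azure_nodes`. A slightly more defensive variant of the second play, sketched here as an alternative I did not use, looks the host up through the `azure_control_plane` group instead and adds a `creates` guard so that nodes which have already joined are skipped. The guard assumes `kubeadm join` leaves `/etc/kubernetes/kubelet.conf` behind on a joined node.

```yaml
- hosts: azure_worker_nodes
  become: true
  tasks:
    - name: Join worker nodes to the cluster
      ansible.builtin.shell: |
        {{ hostvars[groups["azure_control_plane"][0]]["k8_worker_join_command"] }}
      args:
        # Assumption: this file exists once the node has joined,
        # so the task is skipped on nodes that are already members.
        creates: /etc/kubernetes/kubelet.conf
```

This play still has to run in the same playbook as the play that sets the fact, since the fact only lives for the duration of that playbook run.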

That’s it for the worker nodes. Run the playbook with the command ansible-playbook --private-key=~/.ssh/id_azure -u dev -i inventory.ini -f 7 worker-nodes-playbook.yml

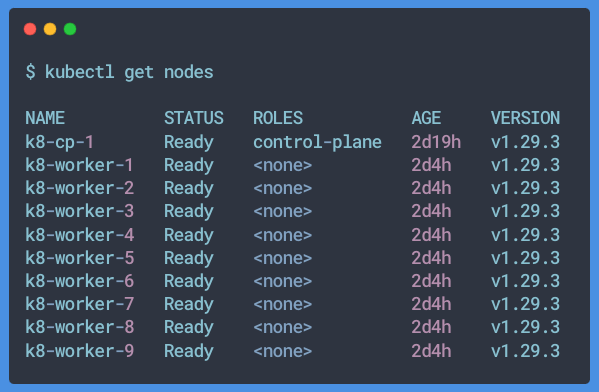

Our Kubernetes cluster is now up and running!

Previous article in series: Set up Kubernetes cluster with Ansible (Part 1)